Photo by Sai Kiran Anagani on Unsplash

Linux Shell Scripting - 6 Popular Real World Use Cases

Linux Shell Script

Automate your daily sys-admin work through shell scripting

Hi everyone! I hope you're excited. Today we are going to learn 6 real-world use cases where scripting can automate our boring day-to-day system admin jobs. So let's get started.

Prerequisites:

Basic understanding of Linux commands, e.g. awk, sed, grep, df -H, uptime etc.

Basic Bash shell scripting concepts: for and while loops, if-else logic etc.

So let's get started

Problems:

Calculate the server uptime and restart the server if the uptime crosses a certain number of days.

Calculate disk space usage and send an alert when any partition reaches 90% usage.

Rotate log files manually once they grow too big.

Stop an unwanted process if it's running on the server.

Take automatic backups of one or multiple files at once using crontab.

Automatically delete backups after a certain number of days using crontab.

Solution 1 - Calculate the server uptime and restart the server if the uptime is more than 15 days.

=> Here we calculate the server uptime with the `uptime` command and pipe its output to `awk` to extract the number of days. Then, with an `if` statement, we check whether the uptime is 15 days or more; if it is, the server reboots with `/sbin/shutdown -r +30`, where `+30` gives 30 minutes of buffer time before the restart.

Then we schedule the script with crontab. Let's see the code below:

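```bash
#!/bin/bash

# Check the uptime and extract the number of days with the powerful awk command.
# uptime prints e.g. "10:14:03 up 16 days,  3:02,  2 users, ...": field 3 is the day
# count only when field 4 is "day," or "days,". Under one day (e.g. "up 3:02," or
# "up 5 min,") field 3 is not a day count, so we treat the uptime as 0 days.
up=$(/usr/bin/uptime | awk '{ if ($4 ~ /^day/) print $3; else print 0 }')

# Always print your variable to check that the command ran successfully
echo "$up"

# Threshold in days (this could also be taken as user input)
days=15

# Reboot if the server has been up for $days days or more
if [ "$up" -ge "$days" ]
then
    # +30 schedules the reboot 30 minutes from now, so running processes get time to stop
    /sbin/shutdown -r +30
fi

exit 0
```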

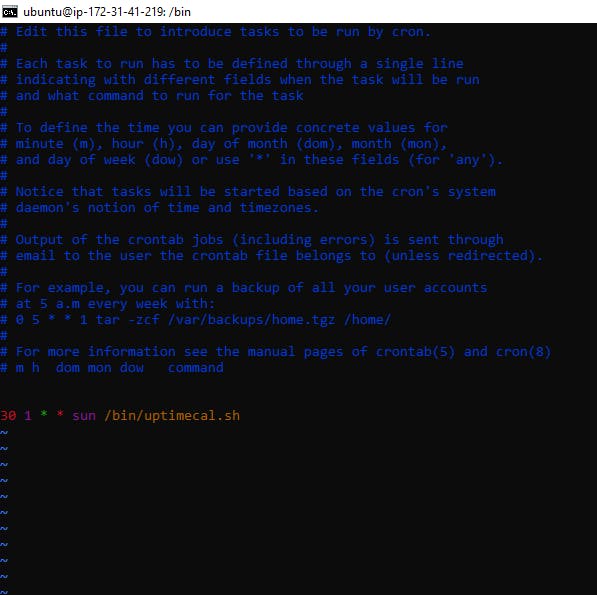
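If parsing the human-readable `uptime` output feels fragile, Linux also exposes the uptime directly: the first number in /proc/uptime is the number of seconds since boot. Below is a minimal sketch of that alternative, assuming a Linux system with /proc mounted. The function name `uptime_days` and its optional file argument are hypothetical, added only so the logic can be tried against a sample file; the sketch prints what it would do instead of rebooting.

```bash
#!/bin/bash

# Hypothetical helper: print whole days of uptime.
# The first field of /proc/uptime is seconds since boot (e.g. "1382400.55 5400000.10").
# The optional file argument lets you test it with a sample file.
uptime_days() {
    awk '{ print int($1 / 86400) }' "${1:-/proc/uptime}"   # 86400 seconds per day
}

days=15
up=$(uptime_days)
echo "Server has been up for $up day(s)"

if [ "$up" -ge "$days" ]; then
    # Dry run: only report; the real script would call /sbin/shutdown -r +30 here
    echo "Uptime limit reached; the real script would run: /sbin/shutdown -r +30"
fi
```

Because /proc/uptime always holds plain seconds, this avoids the special case where `uptime` shows hours or minutes instead of days.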

Then make the script executable with `chmod 755`, save it in the /bin folder (/usr/local/bin is the more conventional place for your own scripts) and add the line `30 1 * * sun /bin/filename` to the crontab. This entry runs the file every Sunday at 1:30 AM; you can change the schedule as per your requirement, as explained below. Since the script reboots the server, it must run as root, so add the entry to root's crontab (`sudo crontab -e`).

Open the crontab with `crontab -e`. The first time you run it, some distributions ask you to choose an editor; choose any one and the crontab file opens. Then specify the command to be executed in the following syntax:

Syntax: (Minute) (Hour) (Day of month) (Month) (Day of week) (Command)

Minute: The minute at which the command is to be executed. It takes a value from 0 to 59.

Hour: The hour at which the command is to be executed. It takes a value from 0 to 23.

Day of month: The date of the month (1-31).

Month: The month of the year (1-12).

Day of week: It takes a value from 0 to 6, where 0 is Sunday, 1 is Monday and so on. Most cron implementations also accept 7 for Sunday and three-letter names such as `sun`.

Command: The command to be executed.

A `*` in any time field means "every" value of that field.

Example: to run our first script every Sunday at 1:30 AM (as specified earlier), add this line: `30 1 * * 0 /bin/filename`
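Editing the crontab by hand works fine on one machine. To add the entry from a script instead, the current crontab can be piped through a small filter and back into `crontab -`. Below is a minimal sketch; `add_cron_entry` is a hypothetical helper that reads a crontab on stdin and appends the entry only if an identical line is not already there, so running it twice does not create duplicates.

```bash
#!/bin/bash

# Hypothetical helper: read a crontab on stdin and print it with ENTRY appended,
# unless an identical line already exists.
add_cron_entry() {
    local entry=$1 current
    current=$(cat)
    # Re-print the existing crontab, if there is one
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -q quiet, -x whole-line match, -F treat the entry literally (no regex)
    if ! printf '%s\n' "$current" | grep -qxF -- "$entry"; then
        printf '%s\n' "$entry"
    fi
}
```

Usage (as root for the reboot script): `crontab -l 2>/dev/null | add_cron_entry "30 1 * * 0 /bin/filename" | crontab -`. The `2>/dev/null` hides the error `crontab -l` prints when no crontab exists yet.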

This concludes our first automation. Happy! Let's move on to the next one.

Solution 2 - Disk Space calculation and set/send alert for 90% usage of any partition/s

=> Let's understand the problem first: we have to calculate the disk usage and, if any partition goes above the threshold value, send an alert to the user of that system.

We print the disk space usage with `df -H`, pipe the output to `grep -vE '^Filesystem|tmpfs|cdrom'`, then to `awk '{print $1, $5}'` and then to `sed -e 's/%/ /g'`. Finally, we pipe that output into a `while` loop, which reads it line by line.

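```bash
#!/bin/bash

echo "Enter a Threshold Value in Number: "
read -r threshold

# Stop early if the input is not a whole number, otherwise [ -ge ] below would fail
if ! [[ $threshold =~ ^[0-9]+$ ]]; then
    echo "Threshold must be a whole number" >&2
    exit 1
fi

# -P (POSIX output) keeps each filesystem on a single line, even with long device names
df -HP | grep -vE '^Filesystem|tmpfs|cdrom' | awk '{print $1, $5}' | sed -e 's/%/ /g' | while read -r newdata
do
    used=$(echo "$newdata" | awk '{print $2}')       # disk usage value (percent, % sign removed)
    partition=$(echo "$newdata" | awk '{print $1}')  # partition name

    if [ "$used" -ge "$threshold" ]
    then
        echo "WARNING: The partition \"$partition\" has used $used% of total Space at - $(date)"
    fi
done
```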

A few points to remember:

- `df -H` generates the disk space report in human-readable units (powers of 1000).
- `grep -vE '^Filesystem|tmpfs|cdrom'` keeps only the rows that do not start with Filesystem and do not contain tmpfs or cdrom: `-v` inverts the match, and `-E` enables extended regular expressions, where `|` means "or".
- `awk '{print $1, $5}'` prints only the filesystem name (column 1) and its Use% value (column 5) from the rows left by grep.
- `sed -e 's/%/ /g'` replaces the "%" character with a space, so the usage can be compared as a number.
- Here I have taken the threshold as user input; you can hard-code it as per your company's disk space rules.

This script can easily be executed as a cron job, as in the previous example. Cron jobs have no terminal to answer the `read` prompt, though, so hard-code the threshold for scheduled runs.
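Here is a minimal sketch of a cron-friendly variant of the disk check. Assumptions: the threshold is hard-coded to 90 (taken from the problem statement), and the checking logic lives in a hypothetical `report_full` function that reads `partition usage%` pairs on stdin, so it can be tried with sample input. It strips the `%` sign with bash's `${used%\%}` suffix removal instead of `sed`.

```bash
#!/bin/bash

# Hard-coded threshold (percent), since cron cannot answer a `read` prompt
THRESHOLD=90

# Hypothetical helper: read "partition usage%" pairs on stdin and
# warn about every partition at or above $1 percent
report_full() {
    local threshold=$1 partition used
    while read -r partition used; do
        used=${used%\%}                        # strip the trailing % sign
        [[ $used =~ ^[0-9]+$ ]] || continue    # skip lines without a numeric usage
        if [ "$used" -ge "$threshold" ]; then
            echo "WARNING: The partition \"$partition\" has used $used% of total Space at - $(date)"
        fi
    done
}

df -HP | grep -vE '^Filesystem|tmpfs|cdrom' | awk '{print $1, $5}' | report_full "$THRESHOLD"
```

When this runs from cron, cron normally e-mails any output of the job to the crontab's owner (or to the address in `MAILTO`) if a mail transfer agent is configured, so the WARNING lines can double as the alert.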

Solution 3 - Rotating Log Files manually (when the file grows too big).

=> What is meant by log rotation?

In information technology, log rotation is an automated process used in system administration in which log files are compressed, moved (archived), renamed or deleted once they are too old or too big. Link

Here we implement that process manually with a script. This is what it does:

First, we store the log file path in `f` and use `if [ ! -f "$f" ]` to check whether the file exists. If it does not, the script creates an empty file with that name and exits, since there is nothing to rotate yet.

If the file does exist, the script moves on and assigns two variables: `MaxSize=$((4096*1024))`, the size limit of 4,194,304 bytes (4 MiB), and `size`, the current size of the file in bytes as reported by `du -b "$f" | awk '{print $1}'`. Next, if the log file is bigger than `MaxSize`, it is moved to a new file named `$f.$timestamp` (the timestamp is the number of seconds since the epoch, from `date +%s`), and `touch "$f"` creates a new, empty file with the previous name (since the original was moved).

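```bash
#!/bin/bash

f="/home/deployment_data/apachedeployment.log"

# If the log file does not exist yet, create it; there is nothing to rotate
if [ ! -f "$f" ]
then
    echo "$f does not exist, creating a file with this name"
    touch "$f"
    exit 0
fi

MaxSize=$((4096*1024))                  # 4 MiB in bytes
size=$(du -b "$f" | awk '{print $1}')   # current size in bytes (GNU du)

if [ "$size" -gt "$MaxSize" ]
then
    echo "Rotating! the logs to new file"
    timestamp=$(date +%s)               # seconds since the epoch
    mv "$f" "$f.$timestamp"
    touch "$f"                          # fresh, empty log with the original name
fi
```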
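The same logic can be wrapped in a reusable function, which makes it easy to rotate several logs or to try it on a throwaway file. This is a minimal sketch: `rotate_if_big` is a hypothetical name, and it measures the size with `wc -c` (a swapped-in technique that does not depend on GNU `du -b`).

```bash
#!/bin/bash

# Hypothetical helper: rotate file $1 when it is larger than $2 bytes
rotate_if_big() {
    local f=$1 max=$2 size timestamp
    # Nothing to rotate yet: just make sure the log file exists
    if [ ! -f "$f" ]; then
        touch "$f"
        return 0
    fi
    size=$(wc -c < "$f")                # size in bytes
    if [ "$size" -gt "$max" ]; then
        timestamp=$(date +%s)
        mv "$f" "$f.$timestamp"         # keep the old content under a timestamped name
        touch "$f"                      # start a fresh, empty log
        echo "Rotated $f ($size bytes) to $f.$timestamp"
    fi
}
```

Usage: `rotate_if_big /home/deployment_data/apachedeployment.log $((4096*1024))`. One caveat: a program that keeps the log open (Apache, for example) continues writing to the renamed file after `mv`, because it still holds the same open file. It usually has to be told to reopen its logs, which is one reason most distributions ship the logrotate tool for this job.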

Note: You can automate this script with crontab, as shown in the first example.

Solution 4 - Stop an unwanted process if it's running on the server

=> As a sys-admin, you often need to stop unwanted processes every day or week. So why not use the power of scripting to do it? Let's get started!

In the code snippet below, we declare a `process_ID` variable to store the PID of the process you want to kill.

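```bash
#!/bin/bash

process_name="<process_name>"   # replace with the name of the process you want to stop

process_ID=$(ps -ef | grep "$process_name" | grep -v grep | awk '{print $2}')
echo "Process ID is : $process_ID"

if [ -n "${process_ID}" ]; then
    echo "Stopping the process with ID: $process_ID"
    # Unquoted on purpose: if several processes match, each PID becomes a separate argument
    kill -9 $process_ID
fi
```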

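- `ps -ef` shows all the processes currently running on the system.
- `grep <process_name>` filters that list by process name (set it as per your requirement).
- `grep -v grep` removes the grep command itself from the output: grep is also a running process, and its command line contains the name we are searching for.
- `awk '{print $2}'` prints the second column of the `ps -ef` output, which is the PID.
- `kill -9 $process_ID` kills the process; it takes the PID as its argument. Signal 9 (SIGKILL) cannot be caught or ignored, so the process gets no chance to clean up.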
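The `ps | grep | grep -v grep` pipeline can also match unrelated processes whose command line merely contains the name. Here is a minimal sketch of a gentler alternative, assuming the `pgrep`/`pkill` tools from procps are installed (they usually ship alongside `ps`): match the exact process name, ask it to stop with SIGTERM first, and fall back to SIGKILL (`kill -9`) only if it is still running after a timeout. The function name `stop_process` and the 10-second default are hypothetical choices.

```bash
#!/bin/bash

# Hypothetical helper: stop every process named exactly $1, politely first (SIGTERM),
# then forcefully (SIGKILL) if it is still running after $2 seconds (default 10)
stop_process() {
    local name=$1 timeout=${2:-10} pids i
    pids=$(pgrep -x "$name")            # -x: exact name, so "java" does not match "javac"
    if [ -z "$pids" ]; then
        echo "$name is not running"
        return 1
    fi
    echo "Sending SIGTERM to $name (PIDs: $(echo $pids))"
    kill -TERM $pids                    # unquoted on purpose: one argument per PID
    for ((i = 0; i < timeout; i++)); do
        pgrep -x "$name" >/dev/null || return 0   # all matching processes are gone
        sleep 1
    done
    echo "$name still running after ${timeout}s, sending SIGKILL"
    pkill -9 -x "$name"
}
```

Usage: `stop_process "<process_name>" 10`. SIGTERM lets a well-behaved program flush its data and exit cleanly, which is why `kill -9` is kept as the last resort.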

Solution 5 - Taking backup Automatically of any file/ multiple files in a single time using crontab.

=> In any IT/software company, your daily work needs to be backed up. We could do it by hand with the cp or mv command, but in the world of automation that's not the right way. Instead, we will create a script and use crontab to run it automatically, let's say every day at 8 PM.

Excited enough? Let's see the magic. Before jumping into the code, create the backup folder: `mkdir /backupfolder` (creating a folder directly under / requires root privileges).

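```bash
#!/bin/bash

# Archive the Imp_Scripts folder into /backupfolder
tar -cvf /backupfolder/filename.tar /home/jaydeep/scripts_folder/Imp_Scripts

# Go to the backup folder location (stop if it is missing)
cd /backupfolder || exit 1
ls -lrt

# Show the size of the folder
du -sh
```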

So above is the basic code for taking daily backups. Now let's understand a few terms.

What is tar? Short for Tape ARchive, tar bundles files and folders into a single archive file and stores it in the destination directory. Note that `tar -cvf` on its own does not compress anything; add the `-z` flag (and a `.tar.gz` file name) to compress the archive with gzip.

The basic Syntax for tar is like this:

tar -cvf /{absolute path to destination folder}/filename.tar /{absolute path of files to be archived}/

Flags used here: -c, -v, -f

Flag -c = create a new tar archive.

Flag -v = verbose: tar prints the name of each file on the command line as it is added to the archive.

Flag -f = use the file name that follows as the archive (here /backupfolder/filename.tar). Because it takes the next argument, keep f as the last letter in the flag group.
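Since `-cvf` only bundles files without compressing them, here is a minimal sketch that also compresses the archive with gzip (`-z`) and then lists its contents with `-t` as a cheap check that the backup can be read back. The function name `make_backup` is hypothetical; it takes the archive path first, followed by one or more folders, so it also covers backing up several folders at once.

```bash
#!/bin/bash

# Hypothetical helper: make_backup ARCHIVE.tar.gz SOURCE [SOURCE...]
make_backup() {
    local archive=$1
    shift
    mkdir -p "$(dirname "$archive")"      # make sure the destination folder exists
    # -c create, -z gzip-compress, -f write to the named archive file
    tar -czf "$archive" "$@" || return 1
    # -t lists the archive's contents: a quick check that the backup is readable
    tar -tzf "$archive" >/dev/null || return 1
    echo "Backup written to $archive ($(du -h "$archive" | cut -f1))"
}
```

Usage: `make_backup /backupfolder/backup.tar.gz /home/jaydeep/scripts_folder/Imp_Scripts`. With absolute source paths, GNU tar strips the leading `/` from member names and prints a notice about it; that is expected.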

We can even write a shell script to back up multiple folders into one archive.

=> The main code stays the same; only the tar syntax changes, like below:

tar -cvf /{destination path}/filename.tar {path to folder 1} {path to folder 2} ...

```bash
#!/bin/bash

f1="/home/jaydeep/scripts/folder1"
f2="/home/jaydeep/scripts/folder2"
f3="/home/jaydeep/scripts/folder3"

# Create one backup tar file containing all the specified folders
tar -cvf /backupfolder/backup.tar "$f1" "$f2" "$f3"

# Go to the backup folder location (stop if it is missing)
cd /backupfolder || exit 1

# Show the size of the folder
du -sh
```

- As a best practice, add a date stamp to the tar file name, so each backup gets its own accurate name.

Edit the first part of the script as follows:

```bash
# Build a name such as backup_05_03_2024.tar (day_month_year) with a single date call
filename="backup_$(date +%d_%m_%Y).tar"

# Create the archive in the backup folder
tar -cvf "/backupfolder/$filename" /home/jaydeep/scripts_folder/Imp_Scripts
```

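Here, `date` is not a bash keyword: it is a standard command (part of GNU coreutils on most Linux systems) that prints the current date and time, i.e. weekday, month, day, time, timezone and year. A `+FORMAT` argument selects which parts to print:

- `date +%d` gives the current day of the month (01-31).
- `date +%m` gives the current month (01-12).
- `date +%Y` gives the current year as four digits.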
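The format codes can also be combined in a single `date` call, which guarantees that day, month and year all come from the same moment (separate calls could straddle midnight). Below is a minimal sketch; the `backup_name` function is hypothetical, and its optional date argument relies on GNU date's `-d` option so the output can be checked for a fixed date.

```bash
#!/bin/bash

# Hypothetical helper: print a backup file name for today,
# or for the date given in $1 (uses GNU date's -d option)
backup_name() {
    # One date call, so day, month and year all come from the same moment
    echo "backup_$(date -d "${1:-now}" +%d_%m_%Y).tar"
}

echo "Today's backup file would be: $(backup_name)"
```

For example, `backup_name 2024-03-05` prints `backup_05_03_2024.tar`. If you prefer file names that sort chronologically in `ls`, a year-first format such as `+%Y-%m-%d` is a common choice.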

Note: make the backup scripts executable with chmod and run them with crontab to achieve the automation (as shown in the first example).

Solution 6 - Shell Script to automatically delete backup after certain days using crontab.

Taking backups daily improves data safety and makes recovery easy, but the backups pile up and need a lot of disk space, and storage is limited and expensive.

Thus, it is ideal to delete backups after a certain number of days, because the latest backups are the most important and up to date.

Here, we can write a shell script to automate that.

```bash
#!/bin/bash

find "/backupfolder" -type f -mtime +30 -exec rm {} \;
```

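`find`: searches the specified directory and performs the action given after the search criteria.

`-type f`: matches only regular files (not directories) in the specified folder.

`-mtime +30`: matches files by their last modification time, counted in whole days. +30 means modified more than 30 days ago; since find drops the fractional part of a file's age, a file must in practice be at least 31 days old to match.

`-exec`: runs the command that follows for every file that matches the criteria above.

`rm`: deletes the matching files.

`{}`: is replaced by the path of each matching file, which is passed to rm one file at a time.

`\;`: marks the end of the -exec command; the backslash stops the shell from treating the semicolon as a command separator.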
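Because this command deletes files, it helps to preview what would be removed first. Here is a minimal sketch with a dry-run mode; the `prune_backups` function name and its arguments are hypothetical, and `-delete` is supported by GNU and BSD find but is not part of POSIX (the `-exec rm {} \;` form works everywhere).

```bash
#!/bin/bash

# Hypothetical helper: prune_backups DIR DAYS [dry-run]
# Lists (dry-run) or deletes regular files in DIR modified more than DAYS days ago.
prune_backups() {
    local dir=$1 days=$2 mode=${3:-delete}
    if [ "$mode" = "dry-run" ]; then
        find "$dir" -type f -mtime +"$days" -print            # only show what would go
    else
        find "$dir" -type f -mtime +"$days" -print -delete    # show each file, then delete it
    fi
}
```

Usage: run `prune_backups /backupfolder 30 dry-run` once to check the list, then schedule `prune_backups /backupfolder 30` with crontab.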

Note: make the above script executable with chmod and run it with crontab to achieve the automation (as shown in the first example).

So here, all of these solutions are built with the power of shell scripting. Implement them, and if you like them, please let me know and follow me on social media.